LVM (Logical Volume Manager) is a disk manager that works through volumes managed by the Linux kernel. Its goal is flexibility: volumes can be added, removed, or resized while the system is online. It can also group several disks into one or more volumes, similar to what we are used to in storage arrays with RAID 0 or RAID 1.

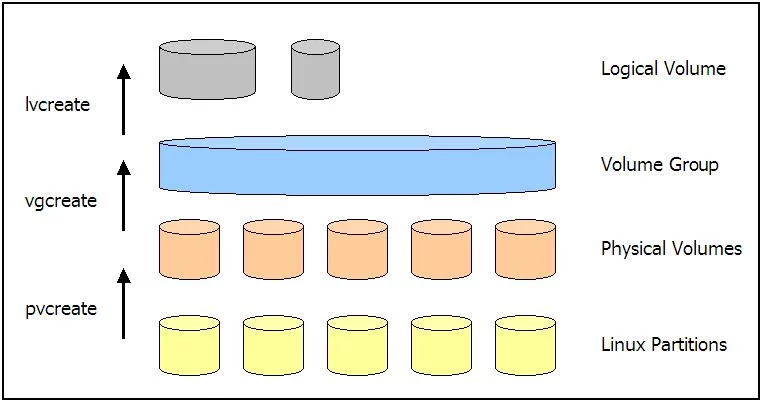

- LVM structure

- Definitions

1 - Physical Volumes: The physical storage used to create the volumes; it can be a whole disk or a partition.

2 - Volume Groups: Groups that contain one or more physical volumes (PVs).

3 - Logical Volumes: Logical volumes that belong to a volume group and are formatted with the chosen file system, such as xfs or ext4.

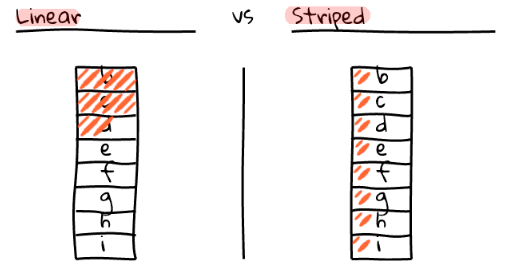

LVM: Striped vs Linear

By default, when we provision disks with LVM we are implicitly using the linear option, which aggregates the physical volumes into one sequential logical volume. The linear image shows the disks being filled one after another.

With the striped option we benefit from better I/O efficiency, because reads and writes are balanced across the whole set of physical disks provisioned in LVM. The downside this post attributes to striping is giving up some disk space in exchange for performance, although in the demonstration further down both volumes end up at 39.98 GiB. The striped image shows all disks from b to i being used in parallel.
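To make the difference concrete, here is a minimal sketch that only does arithmetic and never touches a real device. It assumes the layout used later in this post (4 disks, 2559 extents per disk, a 64 KiB stripe size) plus a 4 MiB extent size, which is the LVM default and is an assumption here. The function names `linear_disk` and `striped_disk` are hypothetical helpers, not LVM commands.

```shell
#!/usr/bin/env bash
# Illustrative only: pure arithmetic, no LVM commands are executed.
# Assumed layout: 4 disks, 2559 extents of 4 MiB per disk, 64 KiB stripes.
DISKS=4
DISK_KIB=$((2559 * 4 * 1024))   # usable KiB per disk
STRIPE_KIB=64

# Linear: disk 0 is filled completely before disk 1 is touched.
linear_disk() {
  local offset_kib=$1
  echo $(( offset_kib / DISK_KIB ))
}

# Striped: consecutive 64 KiB chunks rotate across the disks.
striped_disk() {
  local offset_kib=$1
  echo $(( (offset_kib / STRIPE_KIB) % DISKS ))
}

for offset in 0 64 128 192 256; do
  echo "offset ${offset} KiB -> linear disk $(linear_disk "$offset"), striped disk $(striped_disk "$offset")"
done
```

For the first 256 KiB the linear layout stays on disk 0, while the striped layout visits disks 0, 1, 2, 3 and then wraps back to 0, which is why a large sequential read or write can keep all four disks busy.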

Striped vs Linear

For this demonstration of linear and striped LVM we will use four 10 GB disks for each layout, on Oracle Linux 7.9.

[root@srv01 ~]# cat /etc/*-release | grep PRETTY

PRETTY_NAME="Oracle Linux Server 7.9"

[root@srv01 ~]# uname -a

Linux srv01 5.4.17-2136.315.5.el7uek.x86_64 #2 SMP Wed Dec 21 19:57:57 PST 2022 x86_64 x86_64 x86_64 GNU/Linux

[root@srv01 ~]#

Demonstration using linear LVM

Disks:

[root@srv01 ~]# ls -l /dev/sd[a-z]

brw-rw---- 1 root disk 8, 0 Mar 28 09:43 /dev/sda

brw-rw---- 1 root disk 8, 16 Mar 28 09:43 /dev/sdb

brw-rw---- 1 root disk 8, 32 Mar 28 09:43 /dev/sdc

brw-rw---- 1 root disk 8, 48 Mar 28 09:43 /dev/sdd

brw-rw---- 1 root disk 8, 64 Mar 28 09:43 /dev/sde

brw-rw---- 1 root disk 8, 80 Mar 28 09:43 /dev/sdf

brw-rw---- 1 root disk 8, 96 Mar 28 09:43 /dev/sdg

brw-rw---- 1 root disk 8, 112 Mar 28 09:43 /dev/sdh

brw-rw---- 1 root disk 8, 128 Mar 28 09:43 /dev/sdi

[root@srv01 ~]# fdisk -l | grep "/dev/s"

Disk /dev/sda: 107.4 GB, 107374182400 bytes, 209715200 sectors

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 209715199 103808000 8e Linux LVM

Disk /dev/sdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdc: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdd: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sde: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdf: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdg: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdh: 10.7 GB, 10737418240 bytes, 20971520 sectors

Disk /dev/sdi: 10.7 GB, 10737418240 bytes, 20971520 sectors

Creating the PVs (physical volumes) on the 10 GB disks:

[root@srv01 ~]# pvcreate /dev/sd[b-e]

Physical volume "/dev/sdb" successfully created.

Physical volume "/dev/sdc" successfully created.

Physical volume "/dev/sdd" successfully created.

Physical volume "/dev/sde" successfully created.

Listing the PVs (physical volumes) just created:

[root@srv01 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 ol lvm2 a-- <99.00g 0

/dev/sdb lvm2 --- 10.00g 10.00g

/dev/sdc lvm2 --- 10.00g 10.00g

/dev/sdd lvm2 --- 10.00g 10.00g

/dev/sde lvm2 --- 10.00g 10.00g

Creating the volume group (VG) vg00:

[root@srv01 ~]# vgcreate vg00 /dev/sd[b-e]

Volume group "vg00" successfully created

Listing the vg00 volume group just created:

[root@srv01 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

ol 1 2 0 wz--n- <99.00g 0

vg00 4 0 0 wz--n- 39.98g 39.98g

Creating the logical volume data using 100% of the free space in the volume group:

[root@srv01 ~]# lvcreate -n data -l 100%FREE vg00

Logical volume "data" created.

Listing the logical volume just created:

[root@srv01 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/vg00/data

LV Name data

VG Name vg00

LV UUID VOonGc-ieAK-498e-RDet-BshH-XMFV-Q7aDib

LV Write Access read/write

LV Creation host, time srv01, 2023-03-28 09:35:33 -0300

LV Status available

# open 0

LV Size 39.98 GiB

Current LE 10236

Segments 4

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:2
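The relationship between "Current LE" and "LV Size" in the output above can be checked with a little arithmetic. This is a sketch assuming 4 MiB extents (the LVM default; the output above does not print the extent size), and `extents_to_gib` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Assumption: 4 MiB physical extents (the LVM default).
EXTENT_MIB=4

# Convert a number of extents to GiB with two decimals.
extents_to_gib() {
  awk -v le="$1" -v mib="$EXTENT_MIB" 'BEGIN { printf "%.2f\n", le * mib / 1024 }'
}

extents_to_gib 10236   # whole logical volume (Current LE) -> 39.98
extents_to_gib 2559    # share of one disk (10236 / 4)     -> 10.00
```

The per-disk result is really 9.996 GiB, rounded to 10.00 here, which is consistent with `pvs` reporting the disks as `<10.00g` once they join a volume group: a small part of each disk goes to LVM metadata.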

Formatting the logical volume with XFS:

[root@srv01 ~]# mkfs.xfs /dev/vg00/data

meta-data=/dev/vg00/data isize=256 agcount=4, agsize=2620416 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0, sparse=0, rmapbt=0

= reflink=0

data = bsize=4096 blocks=10481664, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=5118, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Adding the volume to fstab and mounting it on /data:

[root@srv01 ~]# blkid /dev/vg00/data

/dev/vg00/data: UUID="4ccf5732-eefc-41f4-ac39-2d364ffa82c8" TYPE="xfs"

[root@srv01 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Feb 2 08:07:25 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/ol-root / xfs defaults 0 0

UUID=d1a86827-1c89-43a3-917d-cd54d5272c50 /boot xfs defaults 0 0

/dev/mapper/ol-swap swap swap defaults 0 0

UUID=4ccf5732-eefc-41f4-ac39-2d364ffa82c8 /data xfs defaults 0 0

[root@srv01 ~]# mkdir /data

[root@srv01 ~]#

[root@srv01 ~]# mount -a

[root@srv01 ~]#

[root@srv01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 329M 0 329M 0% /dev

tmpfs 344M 0 344M 0% /dev/shm

tmpfs 344M 5.1M 339M 2% /run

tmpfs 344M 0 344M 0% /sys/fs/cgroup

/dev/mapper/ol-root 91G 3.1G 88G 4% /

/dev/sda1 1014M 267M 748M 27% /boot

tmpfs 69M 0 69M 0% /run/user/0

/dev/mapper/vg00-data 40G 33M 40G 1% /data <==================================
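The fstab step above was done by editing the file by hand. As a hedged sketch, the same entry could be appended by a small helper that skips UUIDs already listed. `add_fstab_entry` is a hypothetical function, not part of this post or of any package, and the demo runs against a temporary file rather than /etc/fstab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an fstab entry only when the UUID is not
# already listed. It works on whatever file path it is given, so it can be
# tried on a scratch copy instead of the real /etc/fstab.
add_fstab_entry() {
  local file=$1 uuid=$2 mountpoint=$3 fstype=$4
  if grep -q "^UUID=${uuid}[[:space:]]" "$file"; then
    return 0   # already present, nothing to do
  fi
  printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mountpoint" "$fstype" >> "$file"
}

# Demo on a temporary file; the UUID is the one blkid reported above.
scratch=$(mktemp)
add_fstab_entry "$scratch" 4ccf5732-eefc-41f4-ac39-2d364ffa82c8 /data xfs
add_fstab_entry "$scratch" 4ccf5732-eefc-41f4-ac39-2d364ffa82c8 /data xfs   # no duplicate
cat "$scratch"
```

Mounting by UUID rather than by device path keeps the entry valid even if device names change between boots.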

Checking that the logical volume we created really is linear:

[root@srv01 ~]# lvs --segments

LV VG Attr #Str Type SSize

root ol -wi-ao---- 1 linear <91.00g

swap ol -wi-ao---- 1 linear 8.00g

data vg00 -wi-ao---- 1 linear <10.00g

data vg00 -wi-ao---- 1 linear <10.00g

data vg00 -wi-ao---- 1 linear <10.00g

data vg00 -wi-ao---- 1 linear <10.00g

After mounting vg00-data, we can see that with linear LVM all four disks were aggregated, giving 40 GB available for use.

Demonstration using striped LVM

Creating the PVs (physical volumes) on the 10 GB disks:

[root@srv01 ~]# pvcreate /dev/sd[f-i]

Physical volume "/dev/sdf" successfully created.

Physical volume "/dev/sdg" successfully created.

Physical volume "/dev/sdh" successfully created.

Physical volume "/dev/sdi" successfully created.

Listing the PVs (physical volumes) just created:

[root@srv01 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 ol lvm2 a-- <99.00g 0

/dev/sdb vg00 lvm2 a-- <10.00g 0

/dev/sdc vg00 lvm2 a-- <10.00g 0

/dev/sdd vg00 lvm2 a-- <10.00g 0

/dev/sde vg00 lvm2 a-- <10.00g 0

/dev/sdf lvm2 --- 10.00g 10.00g

/dev/sdg lvm2 --- 10.00g 10.00g

/dev/sdh lvm2 --- 10.00g 10.00g

/dev/sdi lvm2 --- 10.00g 10.00g

Creating the volume group (VG) vg01:

[root@srv01 ~]# vgcreate vg01 /dev/sd[f-i]

Volume group "vg01" successfully created

Listing the vg01 volume group just created:

[root@srv01 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

ol 1 2 0 wz--n- <99.00g 0

vg00 4 1 0 wz--n- 39.98g 0

vg01 4 0 0 wz--n- 39.98g 39.98g

Creating the logical volume oradata as a striped volume, using 100% of the free space in the volume group:

lvcreate --extents 100%FREE --stripes {number of physical disks} --name {LV_NAME} {VG_NAME}

[root@srv01 ~]# lvcreate --extents 100%FREE --stripes 4 --name oradata vg01

Using default stripesize 64.00 KiB.

Logical volume "oradata" created.

Listing the logical volume just created:

[root@srv01 ~]# lvdisplay

--- Logical volume ---

LV Path /dev/vg01/oradata

LV Name oradata

VG Name vg01

LV UUID 2A05vP-mAGA-Dl1G-03IK-uys0-ndlc-irJIbj

LV Write Access read/write

LV Creation host, time srv01, 2023-03-28 10:01:03 -0300

LV Status available

# open 0

LV Size 39.98 GiB

Current LE 10236

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:3

Formatting the logical volume with XFS:

[root@srv01 ~]# mkfs.xfs /dev/vg01/oradata

meta-data=/dev/vg01/oradata isize=256 agcount=16, agsize=655088 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0, sparse=0, rmapbt=0

= reflink=0

data = bsize=4096 blocks=10481408, imaxpct=25

= sunit=16 swidth=64 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=5120, version=2

= sectsz=512 sunit=16 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Adding the volume to fstab and mounting it on /oradata:

[root@srv01 ~]# blkid /dev/vg01/oradata

/dev/vg01/oradata: UUID="ef475d94-e8d7-455a-9b4d-89cf8e6521a3" TYPE="xfs"

[root@srv01 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Thu Feb 2 08:07:25 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/ol-root / xfs defaults 0 0

UUID=d1a86827-1c89-43a3-917d-cd54d5272c50 /boot xfs defaults 0 0

/dev/mapper/ol-swap swap swap defaults 0 0

UUID=4ccf5732-eefc-41f4-ac39-2d364ffa82c8 /data xfs defaults 0 0

UUID=ef475d94-e8d7-455a-9b4d-89cf8e6521a3 /oradata xfs defaults 0 0

[root@srv01 ~]# mkdir -p /oradata

[root@srv01 ~]#

[root@srv01 ~]# mount -a

[root@srv01 ~]#

[root@srv01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 329M 0 329M 0% /dev

tmpfs 344M 0 344M 0% /dev/shm

tmpfs 344M 5.2M 339M 2% /run

tmpfs 344M 0 344M 0% /sys/fs/cgroup

/dev/mapper/ol-root 91G 3.1G 88G 4% /

/dev/sda1 1014M 267M 748M 27% /boot

/dev/mapper/vg00-data 40G 33M 40G 1% /data

tmpfs 69M 0 69M 0% /run/user/0

/dev/mapper/vg01-oradata 40G 33M 40G 1% /oradata <=======================================
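The `lvcreate` template used for the striped volume can be wrapped in a small dry-run helper that only prints the command for review. This is a sketch: `striped_lvcreate_cmd` is a hypothetical name, and the flags are exactly the ones used earlier in this post.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper: prints the lvcreate command, does not run it.
striped_lvcreate_cmd() {
  local vg=$1 lv=$2 stripes=$3
  if [ "$stripes" -lt 2 ]; then
    echo "striping needs at least 2 disks" >&2
    return 1
  fi
  echo "lvcreate --extents 100%FREE --stripes ${stripes} --name ${lv} ${vg}"
}

striped_lvcreate_cmd vg01 oradata 4
```

Printing the command first makes it easy to confirm that the stripe count matches the number of PVs in the volume group before anything is created.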

Checking that the logical volume we created really is striped:

[root@srv01 ~]# lvs --segments

LV VG Attr #Str Type SSize

root ol -wi-ao---- 1 linear <91.00g

swap ol -wi-ao---- 1 linear 8.00g

data vg00 -wi-ao---- 1 linear <10.00g <=============== LINEAR

data vg00 -wi-ao---- 1 linear <10.00g <=============== LINEAR

data vg00 -wi-ao---- 1 linear <10.00g <=============== LINEAR

data vg00 -wi-ao---- 1 linear <10.00g <=============== LINEAR

oradata vg01 -wi-ao---- 4 striped 39.98g <=============== STRIPED

Information about the logical volumes created as linear and striped:

[root@srv01 ~]# lvdisplay -m

--- Logical volume ---

LV Path /dev/vg00/data

LV Name data

VG Name vg00

LV UUID VOonGc-ieAK-498e-RDet-BshH-XMFV-Q7aDib

LV Write Access read/write

LV Creation host, time srv01, 2023-03-28 09:35:33 -0300

LV Status available

# open 1

LV Size 39.98 GiB

Current LE 10236

Segments 4

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:2

--- Segments ---

Logical extents 0 to 2558:

Type linear

Physical volume /dev/sdc

Physical extents 0 to 2558

Logical extents 2559 to 5117:

Type linear

Physical volume /dev/sdb

Physical extents 0 to 2558

Logical extents 5118 to 7676:

Type linear

Physical volume /dev/sdd

Physical extents 0 to 2558

Logical extents 7677 to 10235:

Type linear

Physical volume /dev/sde

Physical extents 0 to 2558

--- Logical volume ---

LV Path /dev/vg01/oradata

LV Name oradata

VG Name vg01

LV UUID 2A05vP-mAGA-Dl1G-03IK-uys0-ndlc-irJIbj

LV Write Access read/write

LV Creation host, time srv01, 2023-03-28 10:01:03 -0300

LV Status available

# open 1

LV Size 39.98 GiB

Current LE 10236

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 252:3

--- Segments ---

Logical extents 0 to 10235:

Type striped

Stripes 4

Stripe size 64.00 KiB

Stripe 0:

Physical volume /dev/sdf

Physical extents 0 to 2558

Stripe 1:

Physical volume /dev/sdg

Physical extents 0 to 2558

Stripe 2:

Physical volume /dev/sdh

Physical extents 0 to 2558

Stripe 3:

Physical volume /dev/sdi

Physical extents 0 to 2558

Disk dependencies of each logical volume:

[root@srv01 ~]# dmsetup deps /dev/vg01/oradata

4 dependencies : (8, 128) (8, 112) (8, 96) (8, 80)

[root@srv01 ~]#

[root@srv01 ~]# dmsetup deps /dev/vg00/data

4 dependencies : (8, 64) (8, 48) (8, 16) (8, 32)

Listing all disks and their details:

[root@srv01 ~]# lsblk --paths -o NAME,KNAME,FSTYPE,LABEL,MOUNTPOINT,SIZE,OWNER,GROUP,MODE,ALIGNMENT,MIN-IO,OPT-IO,PHY-SEC,LOG-SEC,ROTA,SCHED,RQ-SIZE,WSAME

NAME KNAME FSTYPE LABEL MOUNTPOINT SIZE OWNER GROUP MODE ALIGNMENT MIN-IO OPT-IO PHY-SEC LOG-SEC ROTA SCHED RQ-SIZE WSAME

/dev/sdf /dev/sdf LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg01-oradata /dev/dm-2 xfs /oradata 40G root disk brw-rw---- 0 65536 262144 512 512 1 128 0B

/dev/sdd /dev/sdd LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg00-data /dev/dm-3 xfs /data 40G root disk brw-rw---- 0 512 0 512 512 1 128 0B

/dev/sdb /dev/sdb LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg00-data /dev/dm-3 xfs /data 40G root disk brw-rw---- 0 512 0 512 512 1 128 0B

/dev/sdi /dev/sdi LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg01-oradata /dev/dm-2 xfs /oradata 40G root disk brw-rw---- 0 65536 262144 512 512 1 128 0B

/dev/sr0 /dev/sr0 iso9660 OL-7.9 Server.x86_64 4.5G root cdrom brw-rw---- 0 2048 0 2048 2048 1 mq-deadline 2 0B

/dev/sdg /dev/sdg LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg01-oradata /dev/dm-2 xfs /oradata 40G root disk brw-rw---- 0 65536 262144 512 512 1 128 0B

/dev/sde /dev/sde LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg00-data /dev/dm-3 xfs /data 40G root disk brw-rw---- 0 512 0 512 512 1 128 0B

/dev/sdc /dev/sdc LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg00-data /dev/dm-3 xfs /data 40G root disk brw-rw---- 0 512 0 512 512 1 128 0B

/dev/sda /dev/sda 100G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

├─/dev/sda2 /dev/sda2 LVM2_member 99G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

│ ├─/dev/mapper/ol-swap /dev/dm-1 swap [SWAP] 8G root disk brw-rw---- 0 512 0 512 512 1 128 0B

│ └─/dev/mapper/ol-root /dev/dm-0 xfs / 91G root disk brw-rw---- 0 512 0 512 512 1 128 0B

└─/dev/sda1 /dev/sda1 xfs /boot 1G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

/dev/sdh /dev/sdh LVM2_member 10G root disk brw-rw---- 0 512 0 512 512 1 mq-deadline 254 0B

└─/dev/mapper/vg01-oradata /dev/dm-2 xfs /oradata 40G root disk brw-rw---- 0 65536 262144 512 512 1 128 0B
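The MIN-IO and OPT-IO columns for vg01-oradata, and the sunit/swidth values that mkfs.xfs printed earlier, all follow from the stripe size and the number of stripes. The sketch below redoes that arithmetic with values copied from the outputs in this post; the function names are hypothetical and nothing here queries a real device.

```shell
#!/usr/bin/env bash
# Values copied from the outputs in this post.
STRIPE_KIB=64      # "Using default stripesize 64.00 KiB"
STRIPES=4          # lvcreate --stripes 4
XFS_BLOCK=4096     # bsize=4096 in the mkfs.xfs output

# Stripe unit and stripe width in bytes (lsblk MIN-IO / OPT-IO).
stripe_unit_bytes()  { echo $(( STRIPE_KIB * 1024 )); }
stripe_width_bytes() { echo $(( STRIPE_KIB * 1024 * STRIPES )); }

# The same quantities in filesystem blocks (mkfs.xfs sunit / swidth).
xfs_sunit_blocks()  { echo $(( $(stripe_unit_bytes) / XFS_BLOCK )); }
xfs_swidth_blocks() { echo $(( $(stripe_width_bytes) / XFS_BLOCK )); }

echo "MIN-IO=$(stripe_unit_bytes) OPT-IO=$(stripe_width_bytes)"
echo "sunit=$(xfs_sunit_blocks) swidth=$(xfs_swidth_blocks) blks"
```

The results (65536 and 262144 bytes, 16 and 64 blocks) match the lsblk and mkfs.xfs outputs for the striped volume, whereas the linear volume reports sunit=0, swidth=0 and OPT-IO 0 because it has no stripe geometry.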

With the linear option, LVM writes to the disks sequentially; with the striped option we get higher performance because reads and writes are balanced across the 4 disks.

That concludes this post on the two types of LVM layout we can use. Below I leave an interesting image on the use of linear and striped LVM and the IOPS gains. My thanks to Gustavo (Guga) de Souza for making this guide available.

Links:

https://docs.oracle.com/en/learn/ol-lvm/

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/logical_volume_manager_administration/stripe_create_ex